PyTorch: Deep Learning and Artificial Intelligence

Lazy Programmer Inc., Lazy Programmer Team

24:18:06

Description

Neural Networks for Computer Vision, Time Series Forecasting, NLP, GANs, Reinforcement Learning, and More!

What You'll Learn?

- Artificial Neural Networks (ANNs) / Deep Neural Networks (DNNs)

- Predict Stock Returns

- Time Series Forecasting

- Computer Vision

- How to build a Deep Reinforcement Learning Stock Trading Bot

- GANs (Generative Adversarial Networks)

- Recommender Systems

- Image Recognition

- Convolutional Neural Networks (CNNs)

- Recurrent Neural Networks (RNNs)

- Natural Language Processing (NLP) with Deep Learning

- Demonstrate Moore's Law using Code

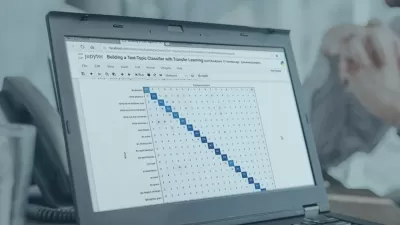

- Transfer Learning to create state-of-the-art image classifiers

Welcome to PyTorch: Deep Learning and Artificial Intelligence!

Although Google's deep learning library TensorFlow has gained massive popularity over the past few years, PyTorch has been the library of choice for professionals and researchers around the globe for deep learning and artificial intelligence.

Is it possible that TensorFlow is popular only because Google is popular and used effective marketing?

Why did TensorFlow change so significantly between version 1 and version 2? Was there something deeply flawed with it, and are there still potential problems?

It is less well-known that PyTorch is backed by another Internet giant, Facebook (specifically, the Facebook AI Research Lab, FAIR). So if you want a popular deep learning library backed by billion-dollar companies and lots of community support, you can't go wrong with PyTorch. And maybe it's a bonus that the library won't completely ruin all your old code when it advances to the next version. ;)

On the flip side, it is very well-known that all the top AI shops (e.g., OpenAI, Apple, and JPMorgan Chase) use PyTorch. OpenAI switched to PyTorch in 2020, a strong sign that PyTorch is picking up steam.

If you are a professional, you will quickly recognize that building and testing new ideas is extremely easy with PyTorch, while it can be pretty hard in other libraries that try to do everything for you. Oh, and it's faster.

Deep Learning has been responsible for some amazing achievements recently, such as:

Generating beautiful, photo-realistic images of people and things that never existed (GANs)

Beating world champions in the strategy game Go, and complex video games like CS:GO and Dota 2 (Deep Reinforcement Learning)

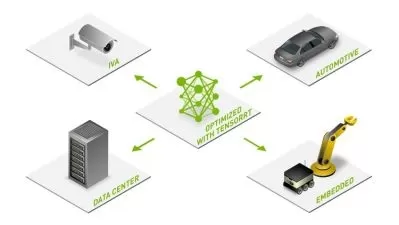

Self-driving cars (Computer Vision)

Speech recognition (e.g. Siri) and machine translation (Natural Language Processing)

Even creating videos of people doing and saying things they never did (DeepFakes - a potentially nefarious application of deep learning)

This course is for beginner-level students all the way up to expert-level students. How can this be?

If you've just taken my free NumPy prerequisite, then you know everything you need to jump right in. We will start with some very basic machine learning models and advance to state-of-the-art concepts.

Along the way, you will learn about all of the major deep learning architectures, such as Deep Neural Networks, Convolutional Neural Networks (image processing), and Recurrent Neural Networks (sequence data).

Current projects include:

- Natural Language Processing (NLP)

- Recommender Systems

- Transfer Learning for Computer Vision

- Generative Adversarial Networks (GANs)

- Deep Reinforcement Learning Stock Trading Bot

Even if you've taken all of my previous courses already, you will still learn how to convert your previous code so that it uses PyTorch, and there are all-new, never-before-seen projects in this course, such as time series forecasting and stock prediction.

This course is designed for students who want to learn fast, but there are also "in-depth" sections in case you want to dig a little deeper into the theory (such as what a loss function is, and what the different types of gradient descent are).

I'm taking the approach that even if you are not 100% comfortable with the mathematical concepts, you can still do this! In this course, we focus more on the PyTorch library, rather than deriving any mathematical equations. I have tons of courses for that already, so there is no need to repeat that here.

Instructor's Note: This course focuses on breadth rather than depth, with less theory in favor of building more cool stuff. If you are looking for a more theory-dense course, this is not it. Generally, for each of these topics (recommender systems, natural language processing, reinforcement learning, computer vision, GANs, etc.) I already have courses singularly focused on those topics.

Thanks for reading, and I'll see you in class!

WHAT ORDER SHOULD I TAKE YOUR COURSES IN?

Check out the lecture "Machine Learning and AI Prerequisite Roadmap" (available in the FAQ of any of my courses, including the free NumPy course)

UNIQUE FEATURES

- Every line of code explained in detail - email me any time if you disagree

- No wasted time "typing" on the keyboard like other courses - let's be honest, nobody can really write code worth learning about in just 20 minutes from scratch

- Not afraid of university-level math - get important details about algorithms that other courses leave out

Who this course is for:

- Beginners to advanced students who want to learn about deep learning and AI in PyTorch

Udemy

- Language: English

- Training sessions: 150

- Duration: 24:18:06

- English subtitles: available

- Release date: 2023/12/16