Processing Streaming Data with Apache Spark on Databricks

Janani Ravi

2:00:53

Description

This course teaches you how to use Spark's abstractions for streaming data and how to transform that data with the Spark Structured Streaming APIs on Azure Databricks.

What You'll Learn

Structured Streaming in Apache Spark treats real-time data as a table that is continuously appended to. This leads to a stream processing model that uses the same APIs as the batch processing model; it is up to Spark to incrementalize batch operations so that they work on the stream. The burden of stream processing shifts from the user to the system, which makes processing streaming data with Spark easy and intuitive.
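The "continuously appended table" idea can be illustrated without Spark at all. The following is a minimal conceptual sketch in plain Python, not Spark's implementation: the event tuples, the `sensor-*` keys, and both function names are made up for illustration. It shows that folding each new micro-batch into running state gives the same answer as re-running the batch query over the whole table.

```python
from collections import Counter


def batch_count(rows):
    """Batch view: count events per key over the whole table at once."""
    return Counter(key for key, _ in rows)


def incremental_count(micro_batches):
    """Streaming view: fold each newly appended micro-batch into running state."""
    state = Counter()
    for batch in micro_batches:
        state.update(key for key, _ in batch)
        yield dict(state)  # the result table as of this micro-batch


# Hypothetical input: three micro-batches of (key, value) events.
micro_batches = [
    [("sensor-a", 1), ("sensor-b", 2)],
    [("sensor-a", 3)],
    [("sensor-b", 4), ("sensor-b", 5)],
]

all_rows = [row for batch in micro_batches for row in batch]
final_streaming_result = list(incremental_count(micro_batches))[-1]

# The incremental result matches the batch result over the full table.
assert final_streaming_result == dict(batch_count(all_rows))
```

In Structured Streaming the user writes only the equivalent of `batch_count`; keeping the running state up to date is the engine's job.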

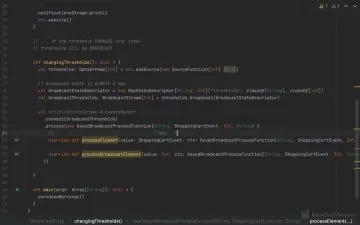

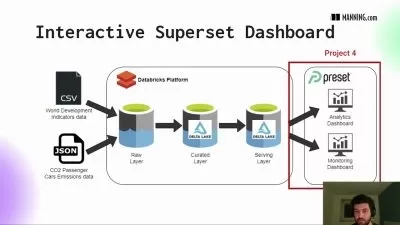

In this course, Processing Streaming Data with Apache Spark on Databricks, you’ll learn to stream and process data using abstractions provided by Spark structured streaming. First, you’ll understand the difference between batch processing and stream processing and see the different models that can be used to process streaming data. You will also explore the structure and configurations of the Spark structured streaming APIs.

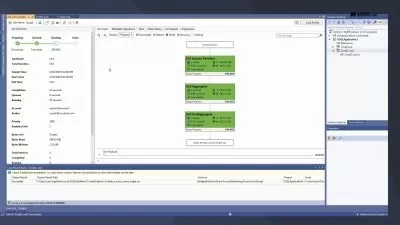

Next, you will learn how to read from a streaming source using Auto Loader on Azure Databricks. Auto Loader automates reading streaming data from a file system and handles file management and the tracking of processed files, which makes it easy to ingest data from external cloud storage sources. You will then perform transformations and aggregations on streaming data and write data out to storage using the append, complete, and update output modes.
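As a rough sketch of what reading with Auto Loader and writing with an output mode can look like in PySpark: the helper names, the Delta sink, and every path argument below are assumptions for illustration and are not taken from the course. The stream functions expect the `spark` session that a Databricks notebook provides, and the `cloudFiles` source is only available on Databricks.

```python
# Output modes supported by Structured Streaming sinks.
VALID_OUTPUT_MODES = {"append", "complete", "update"}


def auto_loader_options(file_format, schema_location):
    """Build the basic Auto Loader options for a given file format."""
    return {
        "cloudFiles.format": file_format,              # e.g. "json" or "csv"
        "cloudFiles.schemaLocation": schema_location,  # where the inferred schema is tracked
    }


def read_with_auto_loader(spark, source_path, file_format, schema_location):
    """Return a streaming DataFrame over files arriving under source_path."""
    return (
        spark.readStream
        .format("cloudFiles")
        .options(**auto_loader_options(file_format, schema_location))
        .load(source_path)
    )


def write_stream(stream_df, target_path, checkpoint_path, output_mode="append"):
    """Start a streaming write to a Delta path (assumed sink) in the given output mode."""
    if output_mode not in VALID_OUTPUT_MODES:
        raise ValueError(f"Unsupported output mode: {output_mode!r}")
    return (
        stream_df.writeStream
        .format("delta")
        .outputMode(output_mode)
        .option("checkpointLocation", checkpoint_path)
        .start(target_path)
    )
```

Which output mode is valid depends on the query: append suits queries that only add rows, while aggregations without a watermark generally need complete or update.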

Finally, you will learn how to use SQL-like abstractions on input streams. You will connect to an external cloud storage source, an Amazon S3 bucket, and read in your stream using Auto Loader. You will then run SQL queries to process your data. Along the way, you will make your stream processing resilient to failures using checkpointing, and you will run your stream processing operation as a job on a Databricks job cluster.
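One way to combine SQL on a stream with checkpointing is sketched below. This is an assumption-laden illustration, not the course's code: the view name `events_stream`, the `device_id` column, the Delta sink, and the path arguments are all hypothetical, and `spark` and `stream_df` are expected to come from a Databricks session and an earlier streaming read.

```python
# Hypothetical query: the view name and column are placeholders.
AGGREGATION_SQL = """
    SELECT device_id, COUNT(*) AS event_count
    FROM events_stream
    GROUP BY device_id
"""


def start_sql_aggregation(spark, stream_df, target_path, checkpoint_path):
    """Run a SQL aggregation over a streaming DataFrame with checkpointing."""
    # Expose the streaming DataFrame to SQL under a temporary view name.
    stream_df.createOrReplaceTempView("events_stream")

    # Querying a streaming view yields another streaming DataFrame.
    aggregated = spark.sql(AGGREGATION_SQL)

    # The checkpoint location stores progress and state, so a restarted
    # query can resume from where the previous run stopped.
    return (
        aggregated.writeStream
        .format("delta")
        .outputMode("complete")
        .option("checkpointLocation", checkpoint_path)
        .start(target_path)
    )
```

Each streaming query should get its own checkpoint location; sharing one between queries would mix their progress information.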

When you’re finished with this course, you’ll have the skills and knowledge of streaming data in Spark needed to process and monitor streams and to identify use cases for transformations on streaming data.


Pluralsight

- language English

- Training sessions 32

- duration 2:00:53

- level average

- Release Date 2023/12/15