01.01-introduction to the course.mp4

00:55

01.02-introduction to the instructor.mp4

05:44

01.03-introduction to the co-instructor.mp4

00:34

01.04-course introduction.mp4

11:16

02.01-what is regular expression.mp4

05:56

02.02-why regular expression.mp4

06:31

02.03-eliza chatbot.mp4

04:23

02.04-python regular expression package.mp4

04:24

03.01-metacharacters.mp4

02:27

03.02-metacharacters bigbrackets exercise.mp4

04:19

03.03-meta characters bigbrackets exercise solution.mp4

03:59

03.04-metacharacters bigbrackets exercise 2.mp4

02:11

03.05-metacharacters bigbrackets exercise 2-solution.mp4

04:37

03.06-metacharacters cap.mp4

03:18

03.07-metacharacters cap exercise 3.mp4

02:03

03.08-metacharacters cap exercise 3-solution.mp4

04:44

03.09-backslash.mp4

05:02

03.10-backslash continued.mp4

07:59

03.11-backslash continued-01.mp4

03:51

03.12-backslash squared brackets exercise.mp4

01:38

03.13-backslash squared brackets exercise solution.mp4

03:58

03.14-backslash squared brackets exercise-another solution.mp4

04:43

03.15-backslash exercise.mp4

01:43

03.16-backslash exercise solution and special sequences exercise.mp4

05:12

03.17-solution and special sequences exercise solution.mp4

04:57

03.18-metacharacter asterisk.mp4

05:18

03.19-metacharacter asterisk exercise.mp4

04:53

03.20-metacharacter asterisk exercise solution.mp4

05:04

03.21-metacharacter asterisk homework.mp4

04:28

03.22-metacharacter asterisk greedy matching.mp4

05:54

03.23-metacharacter plus and question mark.mp4

05:46

03.24-metacharacter curly brackets exercise.mp4

03:25

03.25-metacharacter curly brackets exercise solution.mp4

05:18

04.01-pattern objects.mp4

05:05

04.02-pattern objects match method exercise.mp4

02:43

04.03-pattern objects match method exercise solution.mp4

07:11

04.04-pattern objects match method versus search method.mp4

05:18

04.05-pattern objects finditer method.mp4

03:20

04.06-pattern objects finditer method exercise solution.mp4

06:22

05.01-metacharacters logical or.mp4

06:28

05.02-metacharacters beginning and end patterns.mp4

04:57

05.03-metacharacters parentheses.mp4

07:03

06.01-string modification.mp4

03:49

06.02-word tokenizer using split method.mp4

04:34

06.03-sub method exercise.mp4

04:44

06.04-sub method exercise solution.mp4

04:30

07.01-what is a word.mp4

04:41

07.02-definition of word is task dependent.mp4

05:20

07.03-vocabulary and corpus.mp4

05:38

07.04-tokens.mp4

03:14

07.05-tokenization in spacy.mp4

09:10

08.01-yelp reviews classification mini project introduction.mp4

05:35

08.02-yelp reviews classification mini project vocabulary initialization.mp4

06:48

08.03-yelp reviews classification mini project adding tokens to vocabulary.mp4

04:54

08.04-yelp reviews classification mini project look up functions in vocabulary.mp4

05:57

08.05-yelp reviews classification mini project building vocabulary from data.mp4

09:16

08.06-yelp reviews classification mini project one-hot encoding.mp4

06:51

08.07-yelp reviews classification mini project one-hot encoding implementation.mp4

09:47

08.08-yelp reviews classification mini project encoding documents.mp4

06:50

08.09-yelp reviews classification mini project encoding documents implementation.mp4

06:28

08.10-yelp reviews classification mini project train test splits.mp4

03:41

08.11-yelp reviews classification mini project feature computation.mp4

06:08

08.12-yelp reviews classification mini project classification.mp4

10:45

09.01-tokenization in detial introduction.mp4

04:28

09.02-tokenization is hard.mp4

05:16

09.03-tokenization byte pair encoding.mp4

06:08

09.04-tokenization byte pair encoding example.mp4

08:33

09.05-tokenization byte pair encoding on test data.mp4

07:14

09.06-tokenization byte pair encoding implementation get pair counts.mp4

08:11

09.07-tokenization byte pair encoding implementation merge in corpus.mp4

07:49

09.08-tokenization byte pair encoding implementation bfe training.mp4

06:10

09.09-tokenization byte pair encoding implementation bfe encoding.mp4

06:25

09.10-tokenization byte pair encoding implementation bfe encoding one pair.mp4

09:11

09.11-tokenization byte pair encoding implementation bfe encoding one pair 1.mp4

07:53

10.01-word normalization case folding.mp4

04:43

10.02-word normalization lemmatization.mp4

06:48

10.03-word normalization stemming.mp4

02:31

10.04-word normalization sentence segmentation.mp4

06:27

11.01-spelling correction minimum edit distance introduction.mp4

07:59

11.02-spelling correction minimum edit distance example.mp4

09:04

11.03-spelling correction minimum edit distance table filling.mp4

09:10

11.04-spelling correction minimum edit distance dynamic programming.mp4

07:17

11.05-spelling correction minimum edit distance pseudocode.mp4

03:55

11.06-spelling correction minimum edit distance implementation.mp4

06:57

11.07-spelling correction minimum edit distance implementation bug fixing.mp4

02:40

11.08-spelling correction implementation.mp4

07:42

12.01-what is a language model.mp4

05:50

12.02-language model formal definition.mp4

06:31

12.03-language model curse of dimensionality.mp4

04:27

12.04-language model markov assumption and n-grams.mp4

07:06

12.05-language model implementation setup.mp4

04:24

12.06-language model implementation n-grams function.mp4

08:42

12.07-language model implementation update counts function.mp4

05:46

12.08-language model implementation probability model function.mp4

06:35

12.09-language model implementation reading corpus.mp4

12:17

12.10-language model implementation sampling text.mp4

18:17

13.01-one-hot vectors.mp4

04:10

13.02-one-hot vectors implementation.mp4

05:41

13.03-one-hot vectors limitations.mp4

04:45

13.04-one-hot vectors used as target labeling.mp4

03:42

13.05-term frequency for document representations.mp4

03:12

13.06-term frequency for document representations implementations.mp4

05:31

13.07-term frequency for word representations.mp4

05:17

13.08-tfidf for document representations.mp4

05:01

13.09-tfidf for document representations implementation reading corpus.mp4

04:24

13.10-tfidf for document representations implementation computing document frequency.mp4

04:59

13.11-tfidf for document representations implementation computing tfidf.mp4

07:22

13.12-topic modeling with tfidf 1.mp4

04:20

13.13-topic modeling with tfidf 2.mp4

04:27

13.14-topic modeling with tfidf 3.mp4

04:51

13.15-topic modeling with tfidf 4.mp4

04:40

13.16-topic modeling with gensim.mp4

13:27

14.01-word co-occurrence matrix.mp4

06:25

14.02-word co-occurrence matrix versus document-term matrix.mp4

05:47

14.03-word co-occurrence matrix implementation preparing data.mp4

05:08

14.04-word co-occurrence matrix implementation preparing data 2.mp4

04:12

14.05-word co-occurrence matrix implementation preparing data getting vocabulary.mp4

04:26

14.06-word co-occurrence matrix implementation final function.mp4

11:37

14.07-word co-occurrence matrix implementation handling memory issues on large corpora.mp4

09:19

14.08-word co-occurrence matrix sparsity.mp4

05:19

14.09-word co-occurrence matrix positive point wise mutual information ppmi.mp4

07:58

14.10-pca for dense embeddings.mp4

04:09

14.11-latent semantic analysis.mp4

04:25

14.12-latent semantic analysis implementation.mp4

06:50

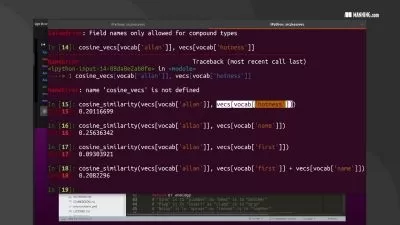

15.01-cosine similarity.mp4

07:05

15.02-cosine similarity getting norms of vectors.mp4

09:18

15.03-cosine similarity normalizing vectors.mp4

06:36

15.04-cosine similarity with more than one vector.mp4

11:00

15.05-cosine similarity getting most similar words in the vocabulary.mp4

10:05

15.06-cosine similarity getting most similar words in the vocabulary fixing bug.mp4

06:23

15.07-cosine similarity word2vec embeddings.mp4

08:21

15.08-word analogies.mp4

04:50

15.09-words analogies implementation 1.mp4

05:55

15.10-word analogies implementation 2.mp4

06:13

15.11-word visualizations.mp4

02:16

15.12-word visualizations implementation.mp4

04:28

15.13-word visualizations implementation 2.mp4

06:37

16.01-static and dynamic embeddings.mp4

06:17

16.02-self supervision.mp4

04:23

16.03-word2vec algorithm abstract.mp4

05:21

16.04-word2vec why negative sampling.mp4

03:49

16.05-word2vec what is skip gram.mp4

04:43

16.06-word2vec how to define probability law.mp4

04:00

16.07-word2vec sigmoid.mp4

05:21

16.08-word2vec formalizing loss function.mp4

05:35

16.09-word2vec loss function.mp4

03:25

16.10-word2vec gradient descent step.mp4

04:21

16.11-word2vec implementation preparing data.mp4

10:37

16.12-word2vec implementation gradient step.mp4

07:18

16.13-word2vec implementation driver function.mp4

13:38

17.01-why rnns for nlp.mp4

13:25

17.02-pytorch installation and tensors introduction.mp4

10:32

17.03-automatic differentiation pytorch.mp4

08:26

18.01-why dnns in machine learning.mp4

04:13

18.02-representational power and data utilization capacity of dnn.mp4

07:13

18.03-perceptron.mp4

05:08

18.04-perceptron implementation.mp4

07:26

18.05-dnn architecture.mp4

03:52

18.06-dnn forwardstep implementation.mp4

08:21

18.07-dnn why activation function is required.mp4

04:47

18.08-dnn properties of activation function.mp4

06:04

18.09-dnn activation functions in pytorch.mp4

03:49

18.10-dnn what is loss function.mp4

07:10

18.11-dnn loss function in pytorch.mp4

05:45

19.01-dnn gradient descent.mp4

07:07

19.02-dnn gradient descent implementation.mp4

05:58

19.03-dnn gradient descent stochastic batch minibatch.mp4

06:51

19.04-dnn gradient descent summary.mp4

02:37

19.05-dnn implementation gradient step.mp4

04:02

19.06-dnn implementation stochastic gradient descent.mp4

13:53

19.07-dnn implementation batch gradient descent.mp4

06:46

19.08-dnn implementation minibatch gradient descent.mp4

09:04

19.09-dnn implementation in pytorch.mp4

15:19

20.01-dnn weights initializations.mp4

04:35

20.02-dnn learning rate.mp4

04:03

20.03-dnn batch normalization.mp4

02:05

20.04-dnn batch normalization implementation.mp4

02:41

20.05-dnn optimizations.mp4

04:08

20.06-dnn dropout.mp4

03:58

20.07-dnn dropout in pytorch.mp4

02:03

20.08-dnn early stopping.mp4

03:34

20.09-dnn hyperparameters.mp4

03:33

20.10-dnn pytorch cifar10 example.mp4

15:46

21.01-what is rnn.mp4

04:54

21.02-understanding rnn with a simple example.mp4

08:29

21.03-rnn applications human activity recognition.mp4

02:53

21.04-rnn applications image captioning.mp4

02:37

21.05-rnn applications machine translation.mp4

03:16

21.06-rnn applications speech recognition stock price prediction.mp4

04:04

21.07-rnn models.mp4

07:07

22.01-language modeling next word prediction.mp4

03:43

22.02-language modeling next word prediction vocabulary index.mp4

04:04

22.03-language modeling next word prediction vocabulary index embeddings.mp4

03:16

22.04-language modeling next word prediction rnn architecture.mp4

04:08

22.05-language modeling next word prediction python 1.mp4

07:12

22.06-language modeling next word prediction python 2.mp4

09:02

22.07-language modeling next word prediction python 3.mp4

07:27

22.08-language modeling next word prediction python 4.mp4

05:33

22.09-language modeling next word prediction python 5.mp4

04:35

22.10-language modeling next word prediction python 6.mp4

13:34

23.01-vocabulary implementation.mp4

09:46

23.02-vocabulary implementation helpers.mp4

05:49

23.03-vocabulary implementation from file.mp4

06:26

23.04-vectorizer.mp4

05:17

23.05-rnn setup.mp4

07:20

23.06-rnn setup 1.mp4

21:23

24.01-rnn in pytorch introduction.mp4

02:04

24.02-rnn in pytorch embedding layer.mp4

07:21

24.03-rnn in pytorch nn rnn.mp4

08:47

24.04-rnn in pytorch output shapes.mp4

04:11

24.05-rnn in pytorch gated units.mp4

03:39

24.06-rnn in pytorch gated units gru lstm.mp4

03:55

24.07-rnn in pytorch bidirectional rnn.mp4

02:44

24.08-rnn in pytorch bidirectional rnn output shapes.mp4

04:26

24.09-rnn in pytorch bidirectional rnn output shapes separation.mp4

04:33

24.10-rnn in pytorch example.mp4

09:48

25.01-rnn encoder decoder.mp4

03:01

25.02-rnn attention.mp4

03:28

26.01-introduction to dataset and packages.mp4

05:10

26.02-implementing language class.mp4

05:22

26.03-testing language class and implementing normalization.mp4

09:44

26.04-reading datafile.mp4

05:17

26.05-reading building vocabulary.mp4

07:56

26.06-encoderrnn.mp4

06:14

26.07-decoderrnn.mp4

06:13

26.08-decoderrnn forward step.mp4

12:08

26.09-decoderrnn helper functions.mp4

04:54

26.10-training module.mp4

13:31

26.11-stochastic gradient descent.mp4

07:12

26.12-nmt training.mp4

05:06

26.13-nmt evaluation.mp4

11:13

9781803249193 Code.zip