Natural Language Processing in Action

17:24:59

00001 Part 1. Wordy machines.mp4

01:36

00002 Natural language vs. programming language.mp4

03:58

00003 The magic.mp4

05:58

00004 The math.mp4

05:45

00005 Practical applications.mp4

05:17

00006 Language through a computer s eyes.mp4

09:20

00007 A simple chatbot.mp4

09:18

00008 Another way.mp4

10:50

00009 A brief overflight of hyperspace.mp4

06:42

00010 Word order and grammar.mp4

04:28

00011 A chatbot natural language pipeline.mp4

06:25

00012 Processing in depth.mp4

06:53

00013 Natural language IQ.mp4

07:30

00014 Challenges a preview of stemming.mp4

09:29

00015 Building your vocabulary with a tokenizer Part 1.mp4

12:11

00016 Building your vocabulary with a tokenizer Part 2.mp4

10:11

00017 Dot product.mp4

04:13

00018 A token improvement.mp4

11:26

00019 Extending your vocabulary with n-grams Part 1.mp4

08:20

00020 Extending your vocabulary with n-grams Part 2.mp4

07:15

00021 Normalizing your vocabulary Part 1.mp4

09:11

00022 Normalizing your vocabulary Part 2.mp4

07:56

00023 Normalizing your vocabulary Part 3.mp4

08:53

00024 Sentiment.mp4

06:21

00025 VADER A rule-based sentiment analyzer.mp4

06:37

00026 Math with words TF-IDF vectors.mp4

04:07

00027 Bag of words.mp4

08:05

00028 Vectorizing.mp4

03:53

00029 Vector spaces.mp4

11:49

00030 Zipf s Law.mp4

05:09

00031 Topic modeling.mp4

08:31

00032 Relevance ranking.mp4

07:42

00033 Okapi BM25.mp4

04:13

00034 From word counts to topic scores.mp4

04:52

00035 TF-IDF vectors and lemmatization.mp4

09:01

00036 Thought experiment.mp4

10:44

00037 An algorithm for scoring topics.mp4

05:51

00038 An LDA classifier.mp4

09:30

00039 Latent semantic analysis.mp4

07:54

00040 Your thought experiment made real.mp4

06:55

00041 Singular value decomposition.mp4

06:42

00042 U left singular vectors.mp4

06:05

00043 SVD matrix orientation.mp4

06:26

00044 Principal component analysis.mp4

07:36

00045 Stop horsing around and get back to NLP.mp4

09:22

00046 Using truncated SVD for SMS message semantic analysis.mp4

10:10

00047 Latent Dirichlet allocation LDiA.mp4

10:28

00048 LDiA topic model for SMS messages.mp4

12:26

00049 Distance and similarity.mp4

07:25

00050 Steering with feedback.mp4

06:05

00051 Topic vector power.mp4

04:46

00052 Semantic search.mp4

08:37

00053 Part 2. Deeper learning neural networks.mp4

02:37

00054 Neural networks the ingredient list.mp4

08:54

00055 Detour through bias Part 1.mp4

09:54

00056 Detour through bias Part 2.mp4

08:47

00057 Detour through bias Part 3.mp4

11:00

00058 Let s go skiing the error surface.mp4

07:56

00059 Keras - Neural networks in Python.mp4

08:53

00060 Semantic queries and analogies.mp4

07:13

00061 Word vectors.mp4

10:04

00062 Vector-oriented reasoning.mp4

07:12

00063 How to compute Word2vec representations Part 1.mp4

09:31

00064 How to compute Word2vec representations Part 2.mp4

08:37

00065 How to use the gensim.word2vec module.mp4

05:08

00066 How to generate your own word vector representations.mp4

07:15

00067 fastText.mp4

05:42

00068 Visualizing word relationships.mp4

10:29

00069 Unnatural words.mp4

05:53

00070 Learning meaning.mp4

07:52

00071 Toolkit.mp4

03:02

00072 Convolutional neural nets.mp4

06:41

00073 Padding.mp4

05:43

00074 Narrow windows indeed.mp4

03:22

00075 Implementation in Keras - prepping the data.mp4

08:14

00076 Convolutional neural network architecture.mp4

09:34

00077 The cherry on the sundae.mp4

09:04

00078 Using the model in a pipeline.mp4

06:51

00079 Loopy recurrent neural networks RNNs.mp4

03:59

00080 Remembering with recurrent networks.mp4

08:03

00081 Backpropagation through time.mp4

09:30

00082 Recap.mp4

10:06

00083 Putting things together.mp4

05:29

00084 Hyperparameters.mp4

05:30

00085 Predicting.mp4

08:16

00086 LSTM Part 1.mp4

09:47

00087 LSTM Part 2.mp4

08:37

00088 Backpropagation through time.mp4

09:31

00089 Back to the dirty data.mp4

09:09

00090 My turn to chat.mp4

03:54

00091 My turn to speak more clearly.mp4

10:56

00092 Learned how to say but not yet what.mp4

05:10

00093 Encoder-decoder architecture.mp4

04:57

00094 Decoding thought.mp4

05:19

00095 Look familiar.mp4

08:06

00096 Assembling a sequence-to-sequence pipeline.mp4

05:06

00097 Sequence encoder.mp4

05:51

00098 Training the sequence-to-sequence network.mp4

03:52

00099 Building a chatbot using sequence-to-sequence networks.mp4

07:36

00100 Enhancements.mp4

05:12

00101 In the real world.mp4

05:31

00102 Part 3. Getting real real-world NLP challenges.mp4

00:55

00103 Named entities and relations.mp4

03:00

00104 A knowledge base.mp4

09:10

00105 Regular patterns.mp4

08:53

00106 Information worth extracting.mp4

02:46

00107 Extracting dates.mp4

09:11

00108 Extracting relationships relations.mp4

09:36

00109 Relation normalization and extraction.mp4

08:12

00110 Why won t split . work.mp4

08:35

00111 Language skill.mp4

06:40

00112 Modern approaches Part 1.mp4

09:35

00113 Modern approaches Part 2.mp4

09:16

00114 Pattern-matching approach.mp4

05:13

00115 A pattern-matching chatbot with AIML Part 1.mp4

04:01

00116 A pattern-matching chatbot with AIML Part 2.mp4

09:46

00117 Grounding.mp4

06:31

00118 Retrieval search.mp4

08:18

00119 Example retrieval-based chatbot.mp4

10:21

00120 Generative models.mp4

08:02

00121 Four-wheel drive.mp4

03:19

00122 Design process.mp4

05:38

00123 Trickery.mp4

09:04

00124 Too much of a good thing data.mp4

03:14

00125 Optimizing NLP algorithms.mp4

06:45

00126 Advanced indexing.mp4

07:06

00127 Advanced indexing with Annoy.mp4

05:17

00128 Why use approximate indexes at all.mp4

05:50

00129 Constant RAM algorithms.mp4

05:34

00130 Parallelizing your NLP computations.mp4

08:28

00131 Reducing the memory footprint during model training.mp4

07:27

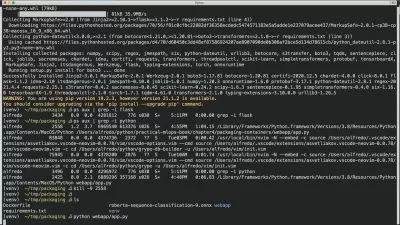

00132 Anaconda3.mp4

07:05

00133 Mac.mp4

07:19

00134 Working with strings.mp4

05:59

00135 Regular expressions.mp4

09:18

00136 Vectors.mp4

08:06

00137 Distances Part 2.mp4

04:49

00138 Data selection and avoiding bias.mp4

06:50

00139 Knowing is half the battle.mp4

06:36

00140 Holding your model back.mp4

04:22

00141 Imbalanced training sets.mp4

06:11

00142 Performance metrics.mp4

09:08

00143 High-dimensional vectors are different.mp4

04:55

00144 High-dimensional thinking.mp4

08:42

00145 High-dimensional indexing.mp4

04:34

Manning Publications

Manning Publications is an American publisher specializing in computing content. It mainly publishes textbooks but also releases videos and projects for computing professionals.

- Language: English

- Training sessions: 145

- Duration: 17:24:59

- Release date: 2023/11/06