Master Data Engineering using GCP Data Analytics

Durga Viswanatha Raju Gadiraju, Asasri Manthena

10:58:22

Description

Learn GCS for Data Lake, BigQuery for Data Warehouse, GCP Dataproc and Databricks for Big Data Pipelines

What You'll Learn?

- Data Engineering leveraging Services under GCP Data Analytics

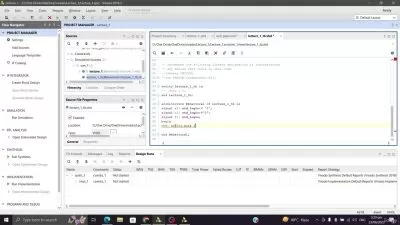

- Setup Development Environment using Visual Studio Code on Windows

- Building Data Lake using GCS

- Process Data in the Data Lake using Python and Pandas

- Build Data Warehouse using Google BigQuery

- Loading Data into Google BigQuery tables using Python and Pandas

- Setup Development Environment using Visual Studio Code on Google Dataproc with Remote Connection

- Big Data Processing or Data Engineering using Google Dataproc

- Run Spark SQL based applications as Dataproc Jobs using Commands

- Build Spark SQL based ELT Data Pipelines using Google Dataproc Workflow Templates

- Run or Instantiate ELT Data Pipelines or Dataproc Workflow Template using gcloud dataproc commands

- Big Data Processing or Data Engineering using Databricks on GCP

- Integration of GCS and Databricks on GCP

- Build and Run Spark based ELT Data Pipelines using Databricks Workflows on GCP

- Integration of Spark on Dataproc with Google BigQuery

- Build and Run Spark based ELT Pipeline using Google Dataproc Workflow Template with BigQuery Integration


Data Engineering is all about building data pipelines that move data from multiple sources into Data Lakes or Data Warehouses, and from there to downstream systems. In this course, I will walk you through building Data Engineering pipelines using the GCP Data Analytics stack. It includes services such as Google Cloud Storage, Google BigQuery, GCP Dataproc, Databricks on GCP, and many more.

You will start by setting up a development environment using VS Code on Windows or Mac.

Once the environment is ready, you need to sign up for a Google Cloud account. We provide all the instructions for signing up, including reviewing billing and claiming the USD 300 credit.

Cloud object storage is typically used as the Data Lake. In this course, you will learn how to use Google Cloud Storage as a Data Lake and how to manage files in Google Cloud Storage using both commands and Python. The course also covers integrating Pandas with files in Google Cloud Storage.
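
As a rough illustration of managing Data Lake files from Python, here is a minimal sketch that assumes the `google-cloud-storage` client library, `pandas`, and `gcsfs` (which Pandas needs for `gs://` paths) are installed and that credentials are already configured. The bucket and file names are hypothetical, and the helper functions are illustrative rather than taken from the course material.

```python
def gcs_uri(bucket_name: str, blob_name: str) -> str:
    """Build a gs:// URI from a bucket name and an object path."""
    return f"gs://{bucket_name}/{blob_name.lstrip('/')}"


def upload_file(bucket_name: str, local_path: str, blob_name: str) -> str:
    """Upload a local file to the bucket and return its gs:// URI."""
    # Imported lazily so the pure helper above works without the library.
    from google.cloud import storage  # pip install google-cloud-storage

    client = storage.Client()
    blob = client.bucket(bucket_name).blob(blob_name)
    blob.upload_from_filename(local_path)
    return gcs_uri(bucket_name, blob_name)


def list_files(bucket_name: str, prefix: str = "") -> list:
    """Return the names of all objects under a prefix."""
    from google.cloud import storage

    client = storage.Client()
    return [blob.name for blob in client.list_blobs(bucket_name, prefix=prefix)]


def read_csv_from_gcs(bucket_name: str, blob_name: str):
    """Read a CSV object straight into a Pandas DataFrame."""
    import pandas as pd  # gs:// support also requires gcsfs

    return pd.read_csv(gcs_uri(bucket_name, blob_name))


if __name__ == "__main__":
    # Hypothetical bucket and object names, used only to show the URI format.
    print(gcs_uri("my-data-lake", "retail/orders/part-00000.csv"))
```

Keeping the URI construction in one small function means the same path can be passed to the storage client, to Pandas, and later to Spark without retyping it.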

GCP provides RDBMS as a service via Cloud SQL. You will learn how to set up a PostgreSQL database server using Cloud SQL. Once the database server is set up, you will create the required application database and user. You will also learn how to develop Python-based applications that integrate with GCP Secret Manager to retrieve the credentials.
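
Retrieving credentials from Secret Manager in a Python application might look like the sketch below. It assumes the `google-cloud-secret-manager` and `psycopg2` packages are installed and that the secret stores only the database password; the project, secret, host, database, and user names are all placeholders, not values from the course.

```python
def secret_version_name(project_id: str, secret_id: str, version: str = "latest") -> str:
    """Build the resource name Secret Manager expects for a secret version."""
    return f"projects/{project_id}/secrets/{secret_id}/versions/{version}"


def get_secret(project_id: str, secret_id: str, version: str = "latest") -> str:
    """Fetch a secret payload and decode it as UTF-8 text."""
    # Imported lazily so the name helper works without the library installed.
    from google.cloud import secretmanager  # pip install google-cloud-secret-manager

    client = secretmanager.SecretManagerServiceClient()
    response = client.access_secret_version(
        request={"name": secret_version_name(project_id, secret_id, version)}
    )
    return response.payload.data.decode("utf-8")


def connect_to_postgres(project_id: str, secret_id: str, host: str, dbname: str, user: str):
    """Open a PostgreSQL connection using a password kept in Secret Manager."""
    import psycopg2  # pip install psycopg2-binary

    # Assumption: the secret payload is the plain-text password for `user`.
    password = get_secret(project_id, secret_id)
    return psycopg2.connect(host=host, dbname=dbname, user=user, password=password)


if __name__ == "__main__":
    # Hypothetical identifiers, shown only to illustrate the resource name.
    print(secret_version_name("my-project", "app-db-password"))
```

The point of the design is that the password never appears in source code or configuration files; only the secret's name does.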

One of the key uses of data is building reports and dashboards. Reports and dashboards are typically built with reporting tools that point to a Data Warehouse. Among Google's Data Analytics services, BigQuery can be used as the Data Warehouse. You will learn the features of BigQuery as a Data Warehouse along with key integrations using Python and Pandas.
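
The Pandas integration mentioned here can be sketched roughly as follows, assuming the `google-cloud-bigquery` client library (with `pyarrow` and `db-dtypes`) is installed and default credentials are configured. The project, dataset, and table names are hypothetical, and replacing the table contents on each load is an assumption chosen for the example.

```python
def full_table_id(project_id: str, dataset: str, table: str) -> str:
    """Build the fully qualified table ID used by the BigQuery client."""
    return f"{project_id}.{dataset}.{table}"


def load_dataframe(df, table_id: str) -> int:
    """Load a Pandas DataFrame into a BigQuery table and return its row count."""
    # Imported lazily so the helper above works without the library installed.
    from google.cloud import bigquery  # pip install google-cloud-bigquery pyarrow

    client = bigquery.Client()
    # Assumption for this sketch: each load replaces the table contents.
    job_config = bigquery.LoadJobConfig(
        write_disposition=bigquery.WriteDisposition.WRITE_TRUNCATE
    )
    job = client.load_table_from_dataframe(df, table_id, job_config=job_config)
    job.result()  # Block until the load job finishes.
    return client.get_table(table_id).num_rows


def query_to_dataframe(sql: str):
    """Run a query and return the result as a Pandas DataFrame."""
    from google.cloud import bigquery

    return bigquery.Client().query(sql).to_dataframe()


if __name__ == "__main__":
    # Hypothetical names, shown only to illustrate the table ID format.
    print(full_table_id("my-project", "retail", "orders"))
```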

At times, we need to process large volumes of data, which is known as Big Data processing. GCP Dataproc is a fully managed Big Data service with Hadoop, Spark, Kafka, etc. You will learn not only how to set up a GCP Dataproc cluster, but also how to use a single-node Dataproc cluster for development. You will set up a development environment using VS Code with a remote connection to the Dataproc cluster.

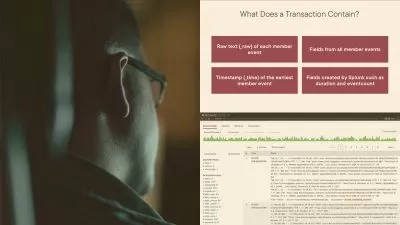

Once you understand how to get started with Big Data processing using Dataproc, you will build end-to-end ELT data pipelines using Dataproc Workflow Templates. You will learn the key commands to submit Dataproc jobs as well as workflows. You will end up building ELT pipelines using Spark SQL.
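
To make the job and workflow commands more concrete, the sketch below assembles the relevant `gcloud dataproc` invocations as argument lists in Python. The template, region, cluster, step, and script names are hypothetical, and running the steps strictly one after another on a single-node managed cluster is an assumption made for illustration.

```python
import subprocess


def submit_spark_sql_job(cluster: str, region: str, sql_file: str) -> list:
    """Command that submits one Spark SQL script as a Dataproc job."""
    return ["gcloud", "dataproc", "jobs", "submit", "spark-sql",
            f"--cluster={cluster}", f"--region={region}", f"--file={sql_file}"]


def workflow_commands(template: str, region: str, cluster_name: str, steps: list) -> list:
    """Commands that create, populate, and instantiate a workflow template.

    `steps` is a list of (step_id, sql_file) pairs that run sequentially.
    """
    base = ["gcloud", "dataproc", "workflow-templates"]
    commands = [
        base + ["create", template, f"--region={region}"],
        # Assumption: a single-node managed cluster is enough for the sketch.
        base + ["set-managed-cluster", template, f"--region={region}",
                f"--cluster-name={cluster_name}", "--single-node"],
    ]
    previous_step = None
    for step_id, sql_file in steps:
        command = base + ["add-job", "spark-sql",
                          f"--workflow-template={template}", f"--region={region}",
                          f"--step-id={step_id}", f"--file={sql_file}"]
        if previous_step is not None:
            # Chain each step after the one before it.
            command.append(f"--start-after={previous_step}")
        commands.append(command)
        previous_step = step_id
    commands.append(base + ["instantiate", template, f"--region={region}"])
    return commands


def run_all(commands: list) -> None:
    """Run each command in order, stopping at the first failure."""
    for command in commands:
        subprocess.run(command, check=True)


if __name__ == "__main__":
    # Hypothetical names; printing instead of running keeps the example safe.
    steps = [("cleanup", "gs://my-bucket/scripts/cleanup.sql"),
             ("convert", "gs://my-bucket/scripts/convert.sql")]
    for command in workflow_commands("elt-pipeline", "us-central1", "elt-cluster", steps):
        print(" ".join(command))
```

Printing the commands first is a cheap way to review the pipeline definition before anything is created in the project; calling `run_all` then executes them in the same order.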

While Dataproc is the GCP-native Big Data service, Databricks is another prominent Big Data service available on GCP. You will also learn how to get started with Databricks on GCP.

Once you have gone through the details of getting started with Databricks on GCP, you will build end-to-end ELT data pipelines using Databricks Jobs and Workflows.

Towards the end of the course, you should be fairly comfortable with BigQuery as the Data Warehouse and GCP Dataproc for data processing. You will then learn how to integrate these two key services by building an end-to-end ELT data pipeline using a Dataproc Workflow. You will also learn how to include a PySpark-based application that uses the Spark BigQuery connector as part of the pipeline.
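
A PySpark application that writes to BigQuery through the Spark BigQuery connector could look roughly like this. It is a sketch under several assumptions: the connector is available on the cluster (for example supplied via `--jars`), the source data is Parquet in GCS, the write goes through a temporary GCS bucket, and all paths, table names, and bucket names are placeholders.

```python
def bigquery_write_options(temp_bucket: str) -> dict:
    """Connector options for a write staged through a temporary GCS bucket."""
    return {"temporaryGcsBucket": temp_bucket}


def copy_parquet_to_bigquery(source_path: str, table: str, temp_bucket: str) -> None:
    """Read Parquet files from GCS and overwrite a BigQuery table with them."""
    # Imported lazily so the options helper works without PySpark installed.
    from pyspark.sql import SparkSession

    spark = SparkSession.builder.appName("gcs-to-bigquery").getOrCreate()
    try:
        df = spark.read.parquet(source_path)
        (df.write.format("bigquery")
            .options(**bigquery_write_options(temp_bucket))
            .mode("overwrite")
            .save(table))  # table in "dataset.table" form
    finally:
        spark.stop()


if __name__ == "__main__":
    # Hypothetical values, shown only to illustrate the option names.
    print(bigquery_write_options("my-temp-bucket"))
```

A script like this can be added to a Dataproc workflow template as a PySpark step after the Spark SQL steps, so the processed data lands in the Data Warehouse as the final stage of the pipeline.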

In the process of building data pipelines, you will also review the application development life cycle of Spark and learn to troubleshoot Spark issues using the relevant web interfaces, such as YARN Timeline Server and Spark UI.

Who this course is for:

- Beginner or Intermediate Data Engineers who want to learn GCP Analytics Services for Data Engineering

- Intermediate Application Engineers who want to explore Data Engineering using GCP Analytics Services

- Data and Analytics Engineers who want to learn Data Engineering using GCP Analytics Services

- Testers who want to learn key skills to test Data Engineering applications built using GCP Analytics Services

Udemy

- Language: English

- Training sessions: 152

- Duration: 10:58:22

- Release date: 2022/12/11