Machine Learning: Neural networks from scratch

Maxime Vandegar

4:54:46

Description

Implementation of neural networks from scratch (Python)

What You'll Learn

- What are neural networks

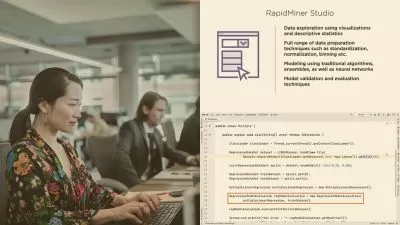

- Implement a neural network from scratch (Python, Java, C, ...)

- Training neural networks

- Activation functions and the universal approximation theorem

- Strengthen your knowledge of Machine Learning and Data Science

- Implementation tricks: Jacobian-Vector product & log-sum-exp trick


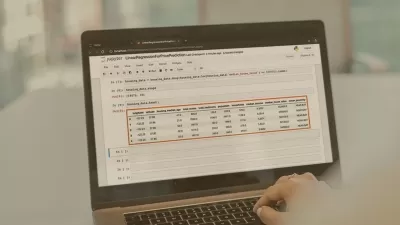

In this course, we will implement a neural network from scratch, without dedicated libraries. Although we will use the Python programming language, by the end of this course you will be able to implement a neural network in any programming language.

We will see how neural networks work, first intuitively and then mathematically. We will also cover some important implementation tricks: the log-sum-exp trick, which keeps the training of neural networks numerically stable, and the Jacobian-vector product, which avoids the memory blow-up that storing full Jacobian matrices during training would cause. Without these tricks, most neural networks would be impractical to train.
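The log-sum-exp trick can be sketched in a few lines of pure Python (standard library only, in keeping with the no-dedicated-libraries approach). It relies on the identity log(sum(exp(x_i))) = m + log(sum(exp(x_i - m))) with m = max(x_i): after the shift, the largest term is exp(0) = 1, so nothing overflows. The function names `logsumexp` and `log_softmax` are illustrative assumptions, not taken from the course.

```python
import math

def logsumexp(xs):
    """Numerically stable log(sum(exp(x) for x in xs))."""
    m = max(xs)  # shift by the max so the largest exponent is exp(0) = 1
    return m + math.log(sum(math.exp(x - m) for x in xs))

def log_softmax(xs):
    """LogSoftmax computed with the log-sum-exp trick."""
    lse = logsumexp(xs)
    return [x - lse for x in xs]

logits = [1000.0, 1001.0, 1002.0]
# A naive math.log(sum(math.exp(x) for x in logits)) raises OverflowError,
# because math.exp(1000.0) exceeds the range of a Python float.
print(logsumexp(logits))
print(log_softmax(logits))
```

The same shift is what makes a LogSoftmax layer safe to use on large logits.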

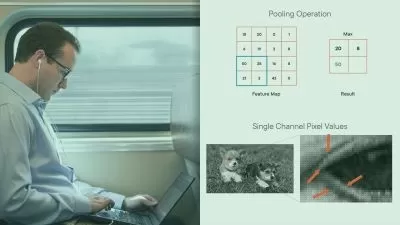

We will train our neural networks on real image classification and regression problems. To do so, we will implement different cost functions, as well as several activation functions.
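As a rough illustration of the activation and cost functions mentioned here, the following pure-Python sketch implements ReLU, a mean-squared-error loss for regression, and a negative log-likelihood loss for classification. The names `relu`, `mse_loss`, and `nll_loss` are hypothetical (modeled on the MSELoss and NLLLoss names in the course's concept list), and averaging over samples is an assumed reduction.

```python
import math

def relu(xs):
    """ReLU activation: max(0, x) applied element-wise."""
    return [max(0.0, x) for x in xs]

def mse_loss(predictions, targets):
    """Mean squared error, averaged over all elements (assumed reduction)."""
    n = len(predictions)
    return sum((p - t) ** 2 for p, t in zip(predictions, targets)) / n

def nll_loss(log_probs, targets):
    """Negative log-likelihood averaged over a batch.

    log_probs: one list of log-probabilities per sample (e.g. LogSoftmax output).
    targets: the index of the correct class for each sample.
    """
    return -sum(row[t] for row, t in zip(log_probs, targets)) / len(targets)

print(relu([-2.0, 0.5, 3.0]))             # hidden-layer activation
print(mse_loss([2.5, 0.0], [3.0, -0.5]))  # regression loss
batch = [[math.log(0.7), math.log(0.2), math.log(0.1)],
         [math.log(0.1), math.log(0.8), math.log(0.1)]]
print(nll_loss(batch, [0, 1]))            # classification loss
```

Pairing `nll_loss` with a LogSoftmax output keeps the whole classification path in log space.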

This course is aimed at developers who would like to implement a neural network from scratch as well as those who want to understand how a neural network works from A to Z.

This course is taught in Python and requires basic programming skills. If you do not have this background, I recommend brushing up with a crash course in programming first. Some knowledge of algebra and calculus will also help you get the most out of this course.

Concepts covered:

- Neural networks

- Implementing neural networks from scratch

- Gradient descent and the Jacobian matrix

- Creating Modules that can be nested to build complex neural architectures

- The log-sum-exp trick

- The Jacobian-vector product

- Activation functions (ReLU, Softmax, LogSoftmax, ...)

- Cost functions (MSELoss, NLLLoss, ...)
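Nested Modules, gradient descent, and Jacobian products from the concept list can be tied together in a minimal pure-Python sketch. The class names `Module`, `Linear`, `ReLU`, `Sequential`, the `step` method, and the toy dataset are assumptions for illustration, not the course's actual code. Each module's `backward` receives the gradient of the loss with respect to its output and returns the gradient with respect to its input, multiplying by the module's Jacobian implicitly instead of building the matrix (strictly, a vector-Jacobian product).

```python
import random

class Module:
    """Base class: forward() maps inputs to outputs; backward() maps
    dLoss/dOutput to dLoss/dInput and stores parameter gradients."""
    def forward(self, x):
        raise NotImplementedError

    def backward(self, grad_out):
        raise NotImplementedError

    def step(self, lr):
        pass  # modules without parameters have nothing to update


class Linear(Module):
    def __init__(self, n_in, n_out):
        self.w = [[random.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out

    def forward(self, x):
        self.x = x  # cached for the backward pass
        return [sum(wij * xj for wij, xj in zip(row, x)) + bi
                for row, bi in zip(self.w, self.b)]

    def backward(self, grad_out):
        # The full Jacobian w.r.t. w would be n_out x (n_out * n_in);
        # its product with grad_out is just the outer product grad_out[i] * x[j].
        self.grad_w = [[g * xj for xj in self.x] for g in grad_out]
        self.grad_b = list(grad_out)
        # Gradient w.r.t. the input: W^T @ grad_out, computed directly.
        return [sum(self.w[i][j] * grad_out[i] for i in range(len(grad_out)))
                for j in range(len(self.x))]

    def step(self, lr):
        # Plain gradient descent: p <- p - lr * dLoss/dp
        for i, row in enumerate(self.w):
            for j in range(len(row)):
                row[j] -= lr * self.grad_w[i][j]
            self.b[i] -= lr * self.grad_b[i]


class ReLU(Module):
    def forward(self, x):
        self.x = x
        return [max(0.0, v) for v in x]

    def backward(self, grad_out):
        # The ReLU Jacobian is diagonal (1 where x > 0, else 0), so the product
        # reduces to an element-wise mask; the n x n matrix is never built.
        return [g if v > 0 else 0.0 for g, v in zip(grad_out, self.x)]


class Sequential(Module):
    """A Module made of Modules, so Sequentials can be nested."""
    def __init__(self, *modules):
        self.modules = modules

    def forward(self, x):
        for m in self.modules:
            x = m.forward(x)
        return x

    def backward(self, grad_out):
        for m in reversed(self.modules):  # chain rule, last module first
            grad_out = m.backward(grad_out)
        return grad_out

    def step(self, lr):
        for m in self.modules:
            m.step(lr)


random.seed(0)
# Nested architecture: an inner Sequential used as one module of the outer one.
model = Sequential(Sequential(Linear(1, 8), ReLU()), Linear(8, 1))
# Hypothetical toy regression data: y = 2x + 1 for x in [-1, 1].
data = [([x / 10], [2 * x / 10 + 1]) for x in range(-10, 11)]

losses = []
for epoch in range(200):
    total = 0.0
    for x, y in data:
        pred = model.forward(x)
        total += (pred[0] - y[0]) ** 2
        model.backward([2 * (pred[0] - y[0])])  # d(squared error)/d(pred)
        model.step(lr=0.01)
    losses.append(total / len(data))  # mean squared error over the epoch

print(f"first epoch MSE: {losses[0]:.4f}, last epoch MSE: {losses[-1]:.4f}")
```

Because `Sequential` is itself a `Module`, any architecture built from these pieces exposes the same `forward`/`backward`/`step` interface, which is what makes nesting work.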

This course will be updated frequently with bonus content.

Don't wait any longer before launching yourself into the world of machine learning!

Who this course is for:

- For developers who would like to implement a neural network without using dedicated libraries

- For those who study machine learning and would like to strengthen their knowledge about neural networks and automatic differentiation frameworks

- For those preparing for job interviews in data science

- For artificial intelligence enthusiasts

Course details:

- Platform: Udemy

- Language: English

- Training sessions: 30

- Duration: 4:54:46

- Release date: 2022/11/17