Apache Spark 2.0 with Java - Learn Spark from a Big Data Guru

Tao W., James Lee, Level Up, Jiarui Zhou

3:24:10

Description

Learn to analyze large data sets with Apache Spark through 10+ hands-on examples. Take your big data skills to the next level.

What You'll Learn?

- An overview of the architecture of Apache Spark.

- Work with Apache Spark's primary abstraction, resilient distributed datasets (RDDs), to process and analyze large data sets.

- Develop Apache Spark 2.0 applications using RDD transformations and actions and Spark SQL.

- Scale up Spark applications on a Hadoop YARN cluster through Amazon's Elastic MapReduce service.

- Analyze structured and semi-structured data using Datasets and DataFrames, and develop a thorough understanding of Spark SQL.

- Share information across different nodes on an Apache Spark cluster using broadcast variables and accumulators.

- Advanced techniques to optimize and tune Apache Spark jobs by partitioning, caching and persisting RDDs.

- Best practices of working with Apache Spark in the field.

What is this course about:

This course covers the fundamentals of Apache Spark with Java and teaches you what you need to know to develop Spark applications with Java. By the end of this course, you will have gained in-depth knowledge of Apache Spark, along with general big data analysis and manipulation skills, to help your company adopt Apache Spark for building big data processing pipelines and data analytics applications.

This course covers 10+ hands-on big data examples. You will learn how to frame data analysis problems as Spark problems. Together we will work through examples such as aggregating NASA Apache web logs from different sources, exploring price trends in California real estate data, writing Spark applications to find the median salary of developers in different countries from the Stack Overflow survey data, and developing a system to analyze how maker spaces are distributed across different regions of the United Kingdom. And much more.

What will you learn from this lecture:

In particular, you will learn:

- An overview of the architecture of Apache Spark.

- Develop Apache Spark 2.0 applications with Java using RDD transformations and actions and Spark SQL.

- Work with Apache Spark's primary abstraction, resilient distributed datasets (RDDs), to process and analyze large data sets.

- Deep dive into advanced techniques to optimize and tune Apache Spark jobs by partitioning, caching and persisting RDDs.

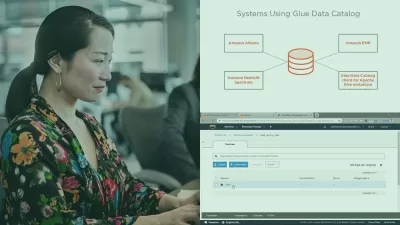

- Scale up Spark applications on a Hadoop YARN cluster through Amazon's Elastic MapReduce service.

- Analyze structured and semi-structured data using Datasets and DataFrames, and develop a thorough understanding of Spark SQL.

- Share information across different nodes on an Apache Spark cluster using broadcast variables and accumulators.

- Best practices of working with Apache Spark in the field.

- Big data ecosystem overview.

Why should we learn Apache Spark:

Apache Spark gives us the ability to build cutting-edge applications. It is also one of the most compelling technologies of the last decade in terms of its disruption of the big data world.

Spark provides in-memory cluster computing which greatly boosts the speed of iterative algorithms and interactive data mining tasks.

Apache Spark is the next-generation processing engine for big data.

Many companies are adopting Apache Spark to extract meaning from massive data sets. Today you have access to that same big data technology right on your desktop.

Apache Spark is becoming a must-have tool for big data engineers and data scientists.

About the author:

Since 2015, James has been helping his company adopt Apache Spark to build its big data processing pipeline and data analytics applications.

James' company has gained massive benefits by adopting Apache Spark in production. In this course, he shares his years of knowledge and best practices from working with Spark in the field.

Why choose this course?

This course is very hands-on. James has put a lot of effort into providing you with not only the theory but also real-life examples of developing Spark applications that you can try out on your own laptop.

James has uploaded all the source code to GitHub, and you will be able to follow along on Windows, macOS or Linux.

By the end of this course, James is confident that you will have gained in-depth knowledge of Spark, along with general big data analysis and data manipulation skills. You'll be able to develop Spark applications that analyze gigabytes of data, both on your laptop and in the cloud using Amazon's Elastic MapReduce service!

30-day Money-back Guarantee!

You will get a 30-day money-back guarantee from Udemy for this course.

If you are not satisfied, simply ask for a refund within 30 days. You will get a full refund, no questions asked.

Are you ready to take your big data analysis skills and career to the next level? Take this course now!

You will go from zero to Spark hero in 4 hours.

Who this course is for:

- Anyone who wants to fully understand how Apache Spark technology works and learn how Apache Spark is being used in the field.

- Software engineers who want to develop Apache Spark 2.0 applications using Spark Core and Spark SQL.

- Data scientists or data engineers who want to advance their career by improving their big data processing skills.

Udemy

- Language: English

- Training sessions: 45

- Duration: 3:24:10

- English subtitles: yes

- Release date: 2024/04/28